Sorry, Dr. Coughlin, “Aging” and “Old Age” Are Real, and They Suck – Article by Dan Elton

Dan Elton, Ph.D.

Author’s Note: This article was also posted on my Substack.

In 2021, Coughlin’s audience spontaneously started clapping when he proclaimed that “old age” is made up. He repeats this mantra often.

Recently I attended a talk at the Broad Institute by Joseph F. Coughlin, who runs the “AgeLab” at MIT. I was shocked and disturbed by what I heard. In Coughlin’s view of reality, the elderly do not actually become less capable as they age; society just thinks they do. According to Coughlin, the elderly are unfairly cast out from society, forced to spend the rest of their days cooped up in nursing homes and retirement communities where they are treated as delicate creatures that need to rest. In Coughlin’s view, any talk of problems associated with “old age” perpetuates harmful stereotypes. Aging, Coughlin insists, is not actually a problem.

Coughlin began his talk by claiming that our entire conception of “old age” is based on a faulty theory about “vital energy” that was popularized in the 1800s. According to this theory, people used up “vital energy” by doing things like working and having sex, eventually reaching a “low battery state” where they needed to rest and conserve what little energy they had left. This theory, according to Coughlin, is responsible for the belief that people become more fatigued as they get older, as well as the belief that people should retire so they can rest and conserve what little vital energy they have left.1 According to Coughlin, both of these beliefs are faulty.

Most shockingly, Coughlin boldly proclaimed that “aging is not a problem to be solved”. My jaw literally dropped upon hearing this, in disbelief at the chutzpah and utter disregard for reality on display.

Coughlin’s talk was very disrespectful to millions of people worldwide who are suffering from the ravages of aging. This issue is personal for me. I watched my grandmother struggle with severe dementia for years along with mobility issues and glaucoma. I also watched aging slowly rob my grandfather of his mobility, quality of life, and dignity.

I challenged Dr. Coughlin on some of his points after his talk, but rather than admitting to some of the realities of aging, he doubled down. He reiterated his view that aging is “not a problem” and is a socially constructed concept. He wouldn’t accept my contention that aging is the underlying driver of all the diseases of old age.

Coughlin might like to pretend that problems of aging don’t actually exist, but the biological reality is that people do become sicker, less energetic, and less capable over time. Aging directly causes cancer, cardiovascular disease, arthritis, muscle loss, frailty, cataracts, macular degeneration, urinary incontinence, hearing loss, decreased libido, loss of taste and smell, weakened voice, Alzheimer’s disease, Parkinson’s, wrinkly skin, hair loss, cognitive decline, memory loss, and a greatly weakened immune system.

While it is true that people are living longer now than decades ago and receiving better medical care, the problem of aging is still very much with us. In fact, aging is a larger problem today since we can keep people alive longer with medical interventions. These interventions (like stents, pacemakers, etc.) keep people alive but they don’t halt aging, so people now experience the horrible ravages of aging for longer than ever before.

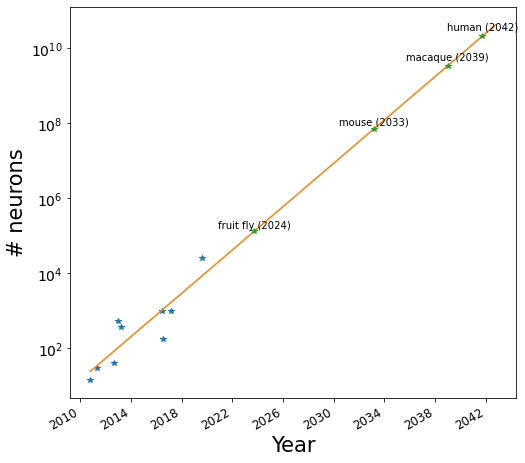

Aging is not a slow, gradual decline. Rather, it’s something that picks up pace rapidly after age 65. The incidence of most of the worst diseases caused by aging increases exponentially with age. Age 65 is right around the “knee” or “turning point” of that exponential curve. It makes sense biologically to say those over 65 are entering “old age”. Consider that only 10% of people die between age 1 and age 65, but 20% of people will die in the next 10 years after that. Between ages 75 and 85, 30% more people will die. Things go downhill quickly after age 65.
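To make the arithmetic behind those figures concrete, here is a minimal Python sketch. It assumes each percentage is a share of the original cohort alive at age 1 (the text does not say so explicitly); the dictionary and function names are purely illustrative.

```python
# Assumption: each figure is a share of the ORIGINAL cohort (alive at age 1),
# not a share of the people still alive at the start of the interval.
deaths_by_interval = {
    "1-65": 0.10,
    "65-75": 0.20,
    "75-85": 0.30,
}


def conditional_death_risk(deaths):
    """For each interval, compute the share of people alive at its start
    who die during it. Also return the share of the cohort still alive
    after the last interval."""
    alive = 1.0
    risks = {}
    for interval, share in deaths.items():
        risks[interval] = share / alive  # risk among current survivors
        alive -= share                   # survivors entering the next interval
    return risks, alive


risks, alive_at_85 = conditional_death_risk(deaths_by_interval)
for interval, risk in risks.items():
    print(f"{interval}: {risk:.0%} of those alive at the start die")
print(f"Still alive at 85: {alive_at_85:.0%}")
```

Under that assumption, the risk among survivors climbs from 10% over the first 64 years to roughly 22% in the decade after 65 and roughly 43% in the decade after 75, which is the acceleration the paragraph describes.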

Of course, we shouldn’t assume any one individual isn’t capable simply because they are over 65. Noam Chomsky is still intellectually engaged at age 94. A few individuals are able to complete triathlons in their 90s. There is clearly significant variation in the rates at which people age due to genetics and other factors. Even as we acknowledge this, we must not turn a blind eye to the reality of how aging affects most people. The reality is that most of us are going to suffer from numerous issues as we enter old age. In fact, as much as it pains me to say this, a significant fraction of people reading this are going to die a slow, painful death unless we as a society get serious about treating and reversing aging.

Who is this guy, and how did he become an authority on longevity?

Dr. Coughlin, who has a Ph.D. in transportation engineering, created the MIT AgeLab in 1999. Always in a suit and bowtie, he projects a confident, authoritative presence. He is an energetic and engaging speaker. It’s not hard to see how, after 20+ years of giving his traveling roadshow, he has become a respected thought leader on longevity, despite having no formal training in the subject.

The core of Coughlin’s message is that aging isn’t a problem and that the elderly are unfairly stigmatized. It’s not hard to see why this message is popular. People don’t want to think about all of the horrible effects of aging they will very likely have to endure later in their life. People prefer to focus on the stories of people who thrive in their 80s and 90s rather than those suffering long drawn-out deaths in hospitals and nursing homes. Talk about “socially constructed categories” sounds intellectually sophisticated as well.

Now to be fair, Coughlin makes a lot of good points in his talks. He often talks about the loss of meaning and purpose many experience after retirement. He talks about how products for the elderly often use drab neutral “healthcare” color schemes like teal and beige. His book The Longevity Economy is about how to market to the growing number of older people, which he says is an often overlooked demographic. He seems to see longevity biotechnology in a positive light.

Coughlin was appointed by President George W. Bush to the White House Advisory Committee on Aging in 2005. More recently he was appointed by Governor Charlie Baker to the Governor’s Council on Aging in Massachusetts. Unfortunately, this is a man who continues to wield power and influence over the direction of healthcare policy in the United States. Otherwise, I wouldn’t have bothered writing this post.

It’s not just Coughlin…

Putting Coughlin aside, I’d like to also point out that many doctors today share Coughlin’s view that aging is not a problem. In fact, I spoke to one such doctor at the event. This dismissive attitude is widespread in the medical establishment and requires forceful disruption to change. In Nick Bostrom’s Fable of the Dragon-Tyrant, for generations people accept the fact that a tyrannical dragon requires regular human sacrifice. When something is accepted as a “normal fact of life”, nobody even contemplates how things might be different. This is exactly the state of things today with respect to aging.

Many people also worry that talking about “aging as a disease” will reinforce unfair stereotypes.2 For instance, Cathal McCrory and Rose Anne Kenny wrote the following in a letter to The Lancet Diabetes & Endocrinology:

“we feel that labelling ageing as a disease serves to reinforce ageist stereotypes and risks legitimising insidious prejudice and discrimination of older people on the basis of age.”

Of course, “ageism” is a massive and thorny problem, just like sexism and racism, and I admit that more honest talk about the realities of aging could exacerbate this problem. At the same time, more honest talk about the reality of aging could also lead to more compassion towards and better social accommodations for the elderly. Regardless, the best path forward here is not to bury our heads in the sand and pretend that aging doesn’t exist. We must confront both problems (aging and ageism) head-on. The ultimate solution to ageism would be to cure aging itself.

Appendix: biological reality

At a high level, aging is very simple – it is the accumulation of damage over time. Aging is well understood and characterized in terms of specific biological processes. A famous 2013 paper called “The Hallmarks of Aging” identified four “primary hallmarks” that cause damage in the body – genome instability, telomere shortening, epigenetic alterations, and loss of proteostasis. Aubrey de Grey famously broke aging into seven specific damage-causing processes – extracellular aggregates, accumulation of senescent cells, extracellular matrix stiffening, intracellular aggregates, mitochondrial mutations, cancerous cells, and cell loss. Karl Pfleger’s very thorough review identifies 18 causes. There is substantial overlap between these lists.3 To the extent there is controversy about the causes of aging, it is mostly around whether there are any more fundamental processes that drive the different damage-causing processes that have been identified.

Let’s look at some graphs that show the acceleration of aging’s harmful effects after ages 65 to 75:

Risk of death vs. age

Energy expenditure vs. age

Cancer incidence vs. age

Musculoskeletal conditions vs. age (back pain, arthritis, etc.)

Cognitive ability vs. age

VO2 max vs. age (a measure of max cardiovascular output)

Grip strength vs. age

Footnotes

Dan Elton, Ph.D., is Director of Scholarship for the U.S. Transhumanist Party. You can find him on Twitter at @moreisdifferent and on LinkedIn. If you like his content, check out his website and consider subscribing to his newsletter on Substack.